DRAM memory module H5UG7HME03X020R DDR5

Characteristics

- Type: DRAM, DDR5
- Memory capacity: 16 GB, 64 GB, 128 GB

Description

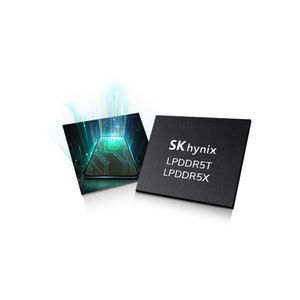

Ultimate DRAM for New Horizons of High-end Memory

World’s First HBM3 Developed in October 2021

Just 15 months after launching HBM2E mass production, SK hynix solidified its leadership in high-speed DRAM by developing HBM3, the latest generation of high-bandwidth memory for cutting-edge technologies across datacenters, supercomputers, and AI.

Advanced Thermal Dissipation

HBM3 runs at lower temperatures than HBM2E at the same operating voltage, enhancing the stability of the server system environment. At equivalent operating temperatures, SK hynix HBM3 can support 12-die stacks, for 1.5x the capacity of HBM2E, and 6Gbps I/O speeds, for 1.8x higher bandwidth. By providing greater cooling capacity under the same operating conditions, SK hynix delivers on its Memory ForEST* initiative.

Performance Boost

SK hynix HBM3, with 1.5x the capacity of HBM2E from 12 DRAM dies stacked within the same total package height, is well suited to capacity-intensive applications such as AI and HPC. A single cube can deliver up to 819GB/s of bandwidth, while an SiP (System-in-Package) with six HBM chips on the same silicon can achieve up to 4.8TB/s in support of exascale demands.
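
The figures above can be cross-checked with some simple arithmetic. The sketch below is only illustrative and rests on assumptions that are not stated in this description: a 1024-bit interface per stack, a per-pin rate of 6.4 Gbps for HBM3 (the text rounds this to 6Gbps), and 3.6 Gbps per pin with 8-die stacks for HBM2E. All names in the code are hypothetical.

```python
# Back-of-the-envelope check of the capacity and bandwidth ratios quoted above.
# ASSUMPTIONS (not taken from this description): 1024-bit interface per stack,
# 6.4 Gbps per pin for HBM3, 3.6 Gbps per pin and 8-die stacks for HBM2E.

INTERFACE_BITS = 1024  # assumed data-bus width of one stack


def stack_bandwidth_gbs(pin_rate_gbps, interface_bits=INTERFACE_BITS):
    """Peak bandwidth of one stack in GB/s: pins * Gbit/s per pin / 8 bits per byte."""
    return pin_rate_gbps * interface_bits / 8


hbm3_gbs = stack_bandwidth_gbs(6.4)    # assumed HBM3 pin rate
hbm2e_gbs = stack_bandwidth_gbs(3.6)   # assumed HBM2E pin rate
six_stack_gbs = 6 * hbm3_gbs           # six stacks in one package

capacity_ratio = 12 / 8                # 12-die stack vs. assumed 8-die stack
bandwidth_ratio = hbm3_gbs / hbm2e_gbs

print(f"HBM3 per stack:  {hbm3_gbs:.1f} GB/s")
print(f"HBM2E per stack: {hbm2e_gbs:.1f} GB/s")
print(f"Bandwidth ratio: {bandwidth_ratio:.2f}x, capacity ratio: {capacity_ratio:.1f}x")
print(f"Six stacks: {six_stack_gbs / 1000:.2f} TB/s (1 TB = 1000 GB)")
print(f"Six stacks: {six_stack_gbs / 1024:.2f} TB/s (1 TB = 1024 GB)")
```

Under these assumptions the per-stack result matches the 819GB/s figure, and the six-stack total matches the quoted 4.8TB/s only when 1 TB is taken as 1024 GB, which suggests that is the convention used for that number.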

On-die ECC

SK hynix HBM3 also features a robust, custom-designed on-die ECC (Error Correcting Code), which uses pre-allocated parity bits to check and correct errors in received data. The embedded circuitry allows the DRAM to self-correct errors within cells, significantly enhancing device reliability.
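
The actual on-die ECC scheme is custom and is not described here. To illustrate the general idea of parity bits that both detect and correct an error, the following is a minimal sketch using a textbook Hamming(7,4) code, which is an assumption chosen for illustration and not the code used in the device. The function names are hypothetical.

```python
# Textbook Hamming(7,4) single-error correction, shown ONLY to illustrate how
# parity bits can locate and fix a flipped bit. This is NOT the device's ECC.

def encode(data):
    """Encode 4 data bits into a 7-bit codeword laid out as p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # covers codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers codeword positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # covers codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def correct(codeword):
    """Return (corrected codeword, 1-based error position, or 0 if none found)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3   # syndrome read as a binary position
    if position:
        c[position - 1] ^= 1          # flip the faulty bit back
    return c, position


def extract_data(codeword):
    """Pull the 4 data bits back out of a 7-bit codeword."""
    return [codeword[2], codeword[4], codeword[5], codeword[6]]


stored = encode([1, 0, 1, 1])
corrupted = list(stored)
corrupted[4] ^= 1                     # simulate a single-bit cell error
repaired, error_position = correct(corrupted)
print("error at position", error_position, "-> data", extract_data(repaired))
```

A code of this kind corrects one flipped bit per codeword. Production designs typically protect much wider data words and may use stronger codes, so this should be read as a conceptual model only.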
